At work, a big part of my professional development now involves wrestling with the problems of AI and how that will change what I need to help people with when they do research. I watched a talk last week called “The AI Dilemma” from the Center for Humane Technology, an organization that I have followed since the wake of the Cambridge Analytica scandal. I think it is worth your time watching, so if you are folding laundry or doing another low-attention household task, now is the perfect time to press play.

In the talk, they framed the deployment of AI much like the deployment of social media — a fight that they say “we [humans] lost” — and I found that to be a very accurate way to put what happened. I also found it to be a great way to improve AI literacy, as most people do not realize that social media algorithms and generative AI are two different implementations of a broader AI bucket.

Social media has helped disseminate information about polytheism and paganism, and it did provide a space for many early communities to connect … before the attention algorithms started working against us to twist and fragment people apart — or, social media started with us Millennials having fun poking friends on college-access-only Facebook and seems to have ended with democratic crises and deeply fractured and polarized societies too dysfunctional to tackle the thorny and hard issues of our times. My analysis of paganism and polytheism is that much of the positive impact on the dissemination of our information is just vibes, as the shift onto social media withered and destroyed many in-person networks that were not already sufficiently large. We would have grown more slowly in a different type of Internet environment, but it might have been better for us. I say this as someone with an avid Zoom social life.

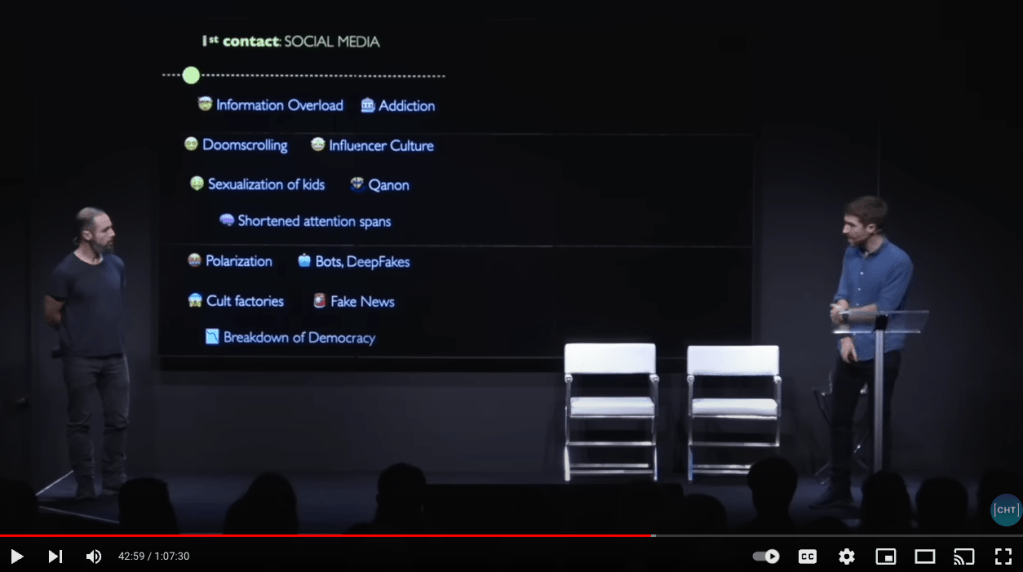

Working against the “drive to the bottom” of social media attention algorithms requires a lot of mental effort, ranging from the low effort of keeping an Instagram feed on-point to be restorative (my private Instagram shows me cats and inspirational stories about women and breathing exercises) to the medium effort of keeping YouTube productive (such as the example I like to give of searching for decluttering content and ending up watching videos of extreme minimalists agonizing over whether they need to declutter their final underwear) to the high effort of the herd-of-cats TikTok algorithm (like a friend of mine who created an account for art content and ended up being served a mix of 4B and BookTok). None of us can be on 100% of the time, and these tools have been trained specifically to be responsive to the feedback that we give them to keep us attentive, typically through more and more extreme and siloed content. The CHT’s presentation accurately described the harms.

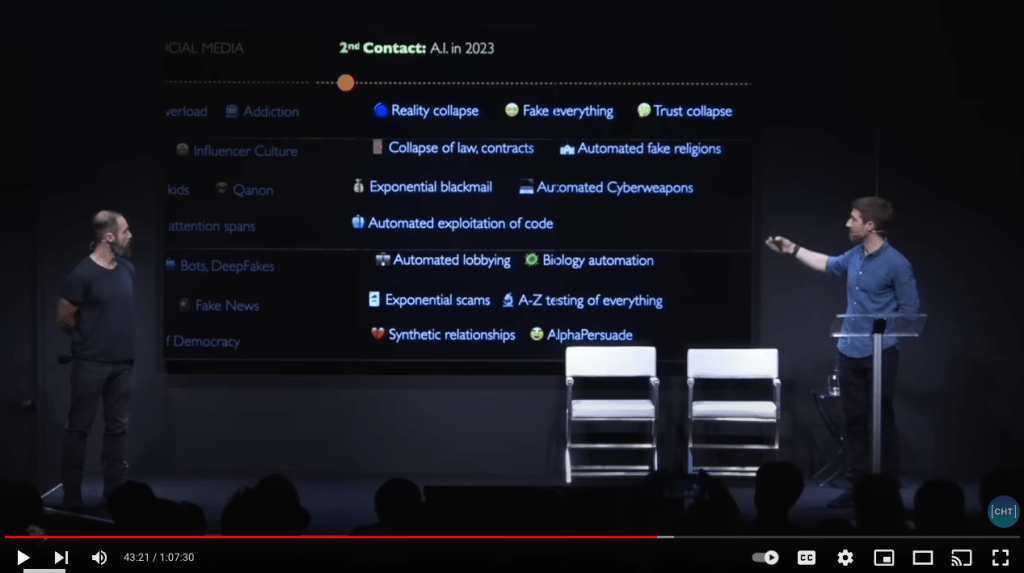

The new types of AI may be similarly disruptive. The CHT frames generative AI as doing for intimacy what the social media AI did with our attention. (You shunt people into silos and destroy everyone’s trust for others, and then you provide an AI tool to each person that can be our special friend. As the presenters say, which AI becomes our close companion? What gets to know us so well that it can play our psychology like an instrument?) Currently, there are no safeguards, and there is no mindful approach to actually fitting the capabilities of the technology to moral and ethical frameworks. Even its applications are being built without looping in all stakeholders to ensure that the product is good, as is happening with AI literature review tools, which IMO are terrible unless your field is less than 15 years old because they do not understand analog resources, yet tons of grad students are using them now anyway. This goes beyond the frustrations in pagan and polytheist spaces about copyright and the data that the AI was trained on or the shift in creatives’ practices towards closed-access works and away from freely available content.

This is just as difficult to talk about as social media in some ways. This type of AI could be groundbreaking in decoding cetacean communication (we may be able to talk to sperm whales soon!!) because it has emergent capabilities based on data it has already trained on. It is decoding the Herculaneum texts. It has been groundbreaking in medical imaging. It can produce captions and screen reader glosses. But, overall, if we do approach it in the same way that we approached social media — which seems to be how we are doing things again — these are the CHT’s warnings:

I think it’s worth everyone’s time to take a look at that presentation and to think about how this will impact you in the next few years. I also think it is worth it for each of us — after all, the unexamined life is not worth living — to look at the negative impacts graphic they made of social media and to think about our own actions there and what we might do differently.

Not everything is in our power, but we most certainly can work on the things which are.

In the religious sphere, this impacts us in another way.

Many of us have, over the past few years, experimented with using AI tools for things related to religion. (Sometimes, it has been fun. I, too, have had ChatGPT write sonnets about Sosipatra.) Earlier this spring, I spent about an hour querying an AI tool — Bing, if you are curious — because I wanted to feed it several leading questions about the Chaldean Oracles to prime the AI to generate some options for chants to sing that were thematically attuned to the style of the Chaldean Oracles and which could work in my daily rituals.

I asked it for both household worship chants and chants for specific deities.

Most of what it produced was inappropriate.

Out of all of the results it generated, one chant was passable, and I made minor edits to it to ensure that I could use it in the setting I needed. This prevented me from needing to spend several years learning Ancient Greek (hey, I’m not even done with the Modern Greek Duolingo yet), which was a benefit to me. However, it involved wading through a probability graph that had set me up to fail.

Others have commented that AI output often seems soulless, as if it is missing something. I talked to a polytheist friend soon after generating the output. During the conversation, we agreed that the AI isn’t “missing” something. It is trained on a dataset that includes a lot of deeply impious information attempting to describe the Gods. Most of the open web data and published data that it consumed believes that Gods are stand-ins for natural phenomena or that they have a one-to-one correspondence to specific domains, like a creative writer I once spoke to who said she “already had a Goddess of Love” in her work and thus didn’t need another one. This is not how polytheism actually works.

So, despite being primed with the Chaldean Oracles, the chants it produced — just as all of the stuff it produces — come out of what it originally ate, from an unruly disorder. It was, said my friend, amazing that I was even able to generate one usable chant given how deep in the mud the AI is. And that’s not even getting into the ethics of how the AI was trained and on what.

That meant less to me given that the Chaldean Oracles were written very long ago, but it means a lot more in the context of most users’ religious queries, where they are asking for examples of rituals they can do for the New or Full Moon or for a specific deity and are unaware that there are entire books dedicated to lunar worship written by experienced polytheists and pagans, books that ground each ritual script in enough preliminaries to help a person along and that are a connection — however tenuously parasocial — with an actual person. The AI might even suggest practices that are inappropriate for a devotee to start on their own without training from an initiated specialist and/or elder. The only ones who stand to gain from using these AI tools for such queries are experienced persons who already know enough to evaluate and act on the information they are presented with, yet AI is now integrated into search tools for everyone.

All of this is to say that our conversations about AI need to move from being reactive to being proactive. The Center for Humane Technology’s podcast and other content are a good place to start for building that background awareness.

And finally, this, which also came up in the conversation with a friend: AI art will never be the same as creating the art oneself or getting it from a polytheistic artist. Or, in the words of Proclus in Book 4 of his Parmenides commentary at almost-852:

Suppose someone had seen Athena herself such as she is portrayed by Homer […]. If, after encountering this vision, a man should wish to paint a picture of the Athena he had seen and should do so; and another man who had seen the Athena of Phidias, presumably in the same posture, should also want to put her figure into a picture and should do so, their pictures would seem to superficial observers to differ not at all; but the one made by the artist who had seen the goddess will make a special impression, whereas that which copies the mental statue will carry only a frigid likeness, since it is the picture of a lifeless object.

Wow, reading this is disturbing on many levels – I have read the news stories about generative AI of course, but I guess in my little hermit bubble it never occurred to me that polytheists would be using it for things like creating rituals. This to me seems like another example of the “tyranny of convenience” – just because something is easier and/or faster, does not make it superior. In fact, it often makes it more harmful. Religious devotion SHOULD take effort, and time. It’s not about shortcuts, and one certainly shouldn’t be outsourcing that effort to something that has no actual understanding of a devotional mindset. Not just because the results will be questionable, but because one misses out on the *process* of learning and experimenting and developing one’s own practice and knowledge. I mean, we could all be programming a bot to scroll prayers 24/7 but is that worship? Does it have the same effect on our souls? Does it foster deep relationships with the deities involved? One wonders what these folks think the actual point is of doing any of this.

I’m trying to find what the sensible path is with AI, and I think it really comes down to whether the use of it supports/enhances the goal/mission (piety) or detracts from it. In the reorg I’ve had at work, we’ve been asking similar questions about where the new handoffs are between roles to make sure our program is on track. I think AI should be avoided when it is about convenience or about taking steep foundational learning shortcuts, as that will always come back to bite someone in the end. If there were an AI trained properly on a pious dataset, it could (as I think I mentioned towards the end) stand to benefit people who already know what they’re doing. With newer people, there’s also the issue of the AI queries replacing the importance of connecting with people who know what they’re doing. The examples I gave of specific research projects like cetacean communication showcase what it looks like to use the tool properly. The goal cannot be achieved at all without language-oriented machine learning.

I hope to be able to watch these soon (after the Congress is over this weekend, hopefully!)…

As you may be aware, I’m always looking for loopholes, and I wonder if there is one in the following circumstance (though I also entirely accept that I may just have a bias in this direction because I’ve had some results that have been really good, and certain other traditional devotional artists I know have commented that they agree): if the divine being in question has never been portrayed before, and one utilizes AI image generators to attempt to make something novel, then it isn’t really a “copy of a copy,” so to speak, since no matter what models the art might ultimately be derived from which the AI was trained on, it’s not a copy of someone else’s image of the divine being concerned since there are none to begin with. Where image-making is concerned, of course, one has to “start somewhere,” and no matter what, if one is producing something in humanoid form, then models for anatomy and such would have been in one’s background no matter what…?!?

There’s a subtle needle to be threaded with these questions, I think, and one that I’m not sure we can really state anything categorical about at this stage, beyond what amounts to canonizing one’s own aesthetics in many cases (as I’ve heard some people opine on these things elsewhere). But if we really accept that the Deities can appear anywhere and can use anything as potential theophanies, as most polytheists do and would, then I am not sure we can entirely discount this, any more than we can discount anything that’s happened via the internet over the past 30 years which has had a numinous quality.

But, I don’t really know…I can only base things upon what any individual instance is, what the Deities-in-question’s divined preferences are, and the other things that we usually rely on in good discernment over such matters, generally speaking.

This kinda reminds me of a story that was in the news recently about a website having an AI Catholic priest until “Father Justin” (as he was called) started suggesting that it’s okay to use Gatorade for baptisms. He has since been defrocked.

A question to ask in such a situation, though, might be: what if Fr. Justin had suggested a good wine or olive oil instead? While it may not fit the Catholic rubrics on these matters, what if one was being “baptized” into a Dionysian or Athenaian devotional context?

In other words: is the bigger issue not this type of semi-random and unexpected suggestion (what else is a kledones oracle than this?), but that what was suggested is something that seems ridiculous or even sacrilegious in some contexts because of its status as a commercial product? Is the issue, then, that Gatorade was the suggestion, and we’d feel similarly if it was Pepsi or 7-Up, or it’s just that it’s “not water” and that’s what the ritual calls for, and so this is a “silly AI [or kid, or trained chicken], that’s not what the rules say!” situation more than anything?

I remember the parish priest that I knew when I was younger telling us about “extraordinary ministers” of certain sacraments, and baptism was the one he focused on (as did most of my courses at Gonzaga when such questions arose), that absolutely any baptized Christian can baptize someone else in a situation of need. If someone was in their dying moments, there was no one else around, and wanted to be baptized, but they happened to be in a desert and the only liquid they had on-hand was a bottle of Gatorade, would it be all right then to use it for that purpose? There’s ultimately some water there, after all…?
